Random forest machine learning pdf

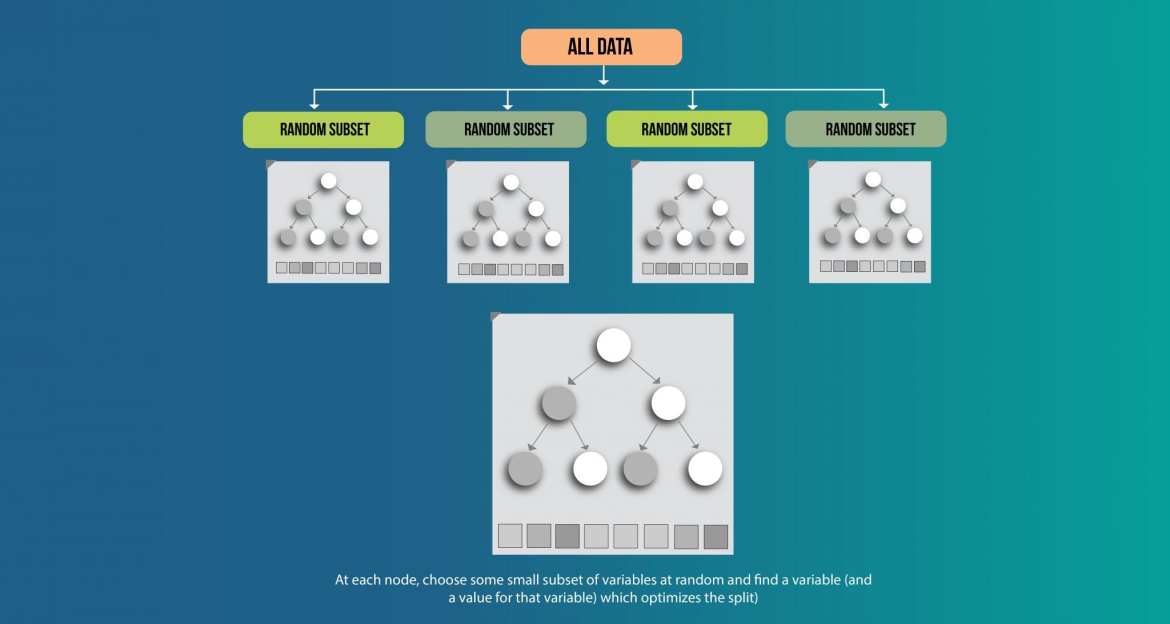

Visualization from scikit-learn.org illustrating decision boundaries and modeling capacity of a single decision tree, a random forest and some other techniques.

ABSTRACT: Data analysis and machine learning have become an integral part of the modern scientific methodology, offering automated procedures for the prediction of a phenomenon based on past observations, un-

Machine Learning Mastery is a perfect blend of math, statistics, and computer science contexts packaged in a practical approach to learning the key points of Machine Learning. This is a great book for more than curious Engineers and Manager types who want a clear, rich, and fact-filled summary of the field of Machine Learning.

• classification: SVM, nearest neighbors, random forest, …
• MLlib is a standard component of Spark providing machine learning primitives on top of Spark.
• MLlib is also comparable to or even better than other libraries specialized in large-scale machine learning.

Why MLlib?
• Scalability
• Performance
• User-friendly APIs
• Integration with Spark and its other components

Random forest (Breiman, 2001) is an ensemble of unpruned classification or regression trees, induced from bootstrap samples of the training data, using random feature selection in …
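The two sources of randomness named above, bootstrap sampling of the training data and random feature selection, can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the helper names `bootstrap_sample` and `random_feature_subset` and the toy rows are hypothetical, and the tree-growing step itself is omitted.

```python
import random


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement (one bootstrap sample)."""
    return [rng.choice(rows) for _ in rows]


def random_feature_subset(n_features, m, rng):
    """Pick m distinct feature indices to consider at one split."""
    return sorted(rng.sample(range(n_features), m))


rng = random.Random(0)

# Toy training rows with four numeric features each (made-up values).
rows = [
    (5.1, 3.5, 1.4, 0.2),
    (6.2, 2.9, 4.3, 1.3),
    (7.7, 3.0, 6.1, 2.3),
    (5.9, 3.0, 5.1, 1.8),
]

sample = bootstrap_sample(rows, rng)           # training set for one tree
features = random_feature_subset(4, 2, rng)    # candidate features for one split
print(sample)
print(features)
```

Each tree in the ensemble would receive its own bootstrap sample, and a fresh feature subset would be drawn at every split.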

21/02/2013 · Random forests, aka decision forests, and ensemble methods. Slides available at: http://www.cs.ubc.ca/~nando/540-2013/… Course taught in 2013 at UBC by Nando de

Keywords: detection; Shadowsocks; random forest algorithm; machine learning. I. INTRODUCTION. The demand for overseas news has been increasing in recent years, and such news may contain sensitive information about politics, economics, democracy, finance, technology and so forth. In order to get around the firewall in this country and to obtain more news, more and more people have learnt

Machine learning is that domain of computational intelligence which is concerned with the question of how to construct computer programs that automatically improve with experience. [16] Reductionist attitude: ML is just a buzzword which equates to statistics plus marketing. Positive attitude: ML paved the way to the treatment of real problems related to data analysis, sometimes overlooked by

Introduction to Random forest – Simplified. Tavish Srivastava, June 10, 2014. With the increase in computational power, we can now choose algorithms which perform very intensive calculations.

Some different ensemble learning approaches based on artificial neural networks, kernel principal component analysis (KPCA), decision trees with Boosting, random forest and automatic design of multiple classifier systems, are proposed to efficiently identify land cover objects.

Random Forest is one of the most popular and most powerful machine learning algorithms. It is a type of ensemble machine learning algorithm called Bootstrap Aggregation or bagging. In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling
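To make the relationship between bagging and random forest concrete, here is a minimal sketch using scikit-learn. It assumes scikit-learn is installed; the synthetic dataset, the number of trees and the `max_features="sqrt"` setting are illustrative choices, not values taken from the post quoted above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, used only so the example is self-contained.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Plain bagging: each tree sees a bootstrap sample and all features at each split.
bagging = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)

# Random forest: bootstrap samples plus a random feature subset at each split.
forest = RandomForestClassifier(n_estimators=50, max_features="sqrt", random_state=0)

bagging.fit(X_train, y_train)
forest.fit(X_train, y_train)

bagging_acc = bagging.score(X_test, y_test)
forest_acc = forest.score(X_test, y_test)
print(f"bagging accuracy: {bagging_acc:.3f}")
print(f"forest accuracy:  {forest_acc:.3f}")
```

Which of the two scores higher depends on the data, so the printed accuracies should be read as a comparison on this toy problem only.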

This is a classic machine learning data set and is described more fully in the 1994 book “Machine Learning, Neural and Statistical Classification”, edited by Michie, D., Spiegelhalter, D.J. and Taylor, C.C.

A random forests quantile classifier for class imbalanced data. Ishwaran H. and Lu M. (2017). Standard errors and confidence intervals for variable importance in random forest …

Implementation and Evaluation of a Random Forest Machine Learning Algorithm Viachaslau Sazonau University of Manchester, Oxford Road, Manchester, M13 9PL,UK

Deep Neural Decision Forests microsoft.com

The Random Forest Based Detection of Shadowsock’s Traffic

The learning algorithm used in our paper is random forest. The time series data is acquired, smoothed and technical indicators are extracted.

Addressing random forests to learn both proper representations of the input data and the final classifiers in a joint manner is an open research field that has received little at-

1. Random Forests. 1.1 Introduction. Significant improvements in classification accuracy have resulted from growing an ensemble of trees and letting them vote for the most popular class.

Random Forests, and Neural Networks which can be used for market prediction. Examples include Kim (2003), Cao and Tay (2003), Huang, Nakamori and …

Random Forest for Bioinformatics Yanjun Qi 1 Introduction Modern biology has experienced an increasing use of machine learning techniques for large scale and complex biological data analysis.

Introduction to decision trees and random forests Ned Horning American Museum of Natural History’s Center for Biodiversity and Conservation horning@amnh.org

Extreme Machine Learning with GPUs John Canny Computer Science Division University of California, Berkeley GTC, March, 2014

Why and how to use random forest. Outline: Introduction; Construction; R functions; Variable importance; Tests for variable importance; Conditional importance; Summary; References.

Random forests or random decision forests are an ensemble learning method for classification, regression and other tasks that operates by constructing a multitude of decision trees at training time and outputting the class that is the mode of the classes (classification) or mean prediction (regression) of the individual trees.
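The aggregation step in this definition (mode of the classes for classification, mean prediction for regression) can be sketched with the standard library alone. The function names and the per-tree outputs below are hypothetical, chosen only to illustrate the rule.

```python
from collections import Counter
from statistics import mean


def forest_classify(tree_votes):
    """Return the most common class among the individual trees' votes."""
    return Counter(tree_votes).most_common(1)[0][0]


def forest_regress(tree_outputs):
    """Return the mean of the individual trees' numeric predictions."""
    return mean(tree_outputs)


# Made-up outputs of five classification trees and three regression trees.
votes = ["spam", "ham", "spam", "spam", "ham"]
outputs = [2.0, 3.0, 4.0]

print(forest_classify(votes))   # spam
print(forest_regress(outputs))  # 3.0
```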

Ensemble Machine Learning in Python: Random Forest, AdaBoost

Paper 2521-2018: Claim Risk Scoring using Survival Analysis Framework and Machine Learning with Random Forest. Yuriy Chechulin, Jina Qu, Terrance D’souza

memory-based learning, random forests, decision trees, bagged trees, boosted trees, and boosted stumps. We also examine the effect that calibrating the models via Platt Scaling and Isotonic Regression has on their performance. An important aspect of our study is the use of a variety of performance criteria to evaluate the learning methods. 1. Introduction. There are few comprehensive empirical
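As an illustration of the two calibration methods mentioned in this excerpt, the sketch below wraps a random forest in scikit-learn's `CalibratedClassifierCV`, where `method="sigmoid"` corresponds to Platt scaling and `method="isotonic"` to isotonic regression. It assumes scikit-learn is installed; the synthetic data and hyperparameters are illustrative and are not those of the study quoted above.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic binary classification data, for illustration only.
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

calibrated = {}
for method in ("sigmoid", "isotonic"):  # Platt scaling, isotonic regression
    forest = RandomForestClassifier(n_estimators=50, random_state=0)
    # Calibration is fitted with 3-fold cross-validation on the training set.
    model = CalibratedClassifierCV(forest, method=method, cv=3)
    model.fit(X_train, y_train)
    # Calibrated probability of the positive class for each test row.
    calibrated[method] = model.predict_proba(X_test)[:, 1]

for method, probs in calibrated.items():
    print(method, probs[:3])
```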

Random Forest in Machine Learning. Random forest handles non-linearity by exploiting correlation between the features of a data point/experiment. With training data that has correlations between the features, the Random Forest method is a better choice for classification or regression.

Image Classification using Random Forests and Ferns Anna Bosch Computer Vision Group University of Girona aboschr@eia.udg.es Andrew Zisserman Dept. of Engineering Science

Generation of Random Forest in Machine Learning. What is the training data for a Random Forest in Machine Learning? Training data is an array of vectors in N-dimensional space.

2 Method. Random forests is a popular ensemble method invented by Breiman and Cutler. It was chosen for this contest because of the many advantages it offers.

I think this is a great question, but it is being asked in the wrong place. It is really a methods question and/or a request for a package recommendation.

This paper investigates and reports the use of the random forest machine learning algorithm in the classification of phishing attacks, with the major objective of developing an improved phishing email

Random Forest is a powerful machine learning algorithm that allows you to create models that can give good overall accuracy when making new predictions. This is achieved because Random Forest is an ensemble machine learning technique that builds and uses many tens or hundreds of decision trees, all created in a slightly different way. Each of these decision trees is used to make a prediction

Trees and Random Forests. Adele Cutler. Professor, Mathematics and Statistics. Utah State University. This research is partially supported by NIH 1R15AG037392-01

Enhancement of Web Proxy Caching Using Random Forest Machine Learning Technique. Julian Benadit.P1, Sagayaraj Francis.F2, Nadhiya.M3 1 Research scholar,

Package ‘randomForest’ (March 25, 2018)
Title: Breiman and Cutler’s Random Forests for Classification and Regression
Version: 4.6-14
Date: 2018-03-22

Regression and two state-of-the-art Machine Learning algorithms: Random Forest (RF) and Gradient Boosting (GB). The ROC-AUC score (presented in the following section) equals 0.75 for the Logit model, reaches 0.83 for RF and goes up to 0.84 for GB. The gain in prediction quality is clear, with a gain of up to 0.09 (9 percentage points) in ROC-AUC score. In parallel, the same happens with the Gini score with a gain
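The arithmetic behind these figures can be checked with a short calculation. The ROC-AUC values are the ones quoted above; the conversion Gini = 2 × AUC − 1 is the convention commonly used in credit scoring and is an assumption here, since the excerpt does not define its Gini score.

```python
# ROC-AUC values quoted in the excerpt.
auc = {"Logit": 0.75, "RF": 0.83, "GB": 0.84}

# Assumed convention: Gini = 2 * AUC - 1.
gini = {name: round(2 * value - 1, 2) for name, value in auc.items()}

# Gain of GB over the Logit model, in absolute and relative terms.
abs_gain = round(auc["GB"] - auc["Logit"], 2)
rel_gain = round(abs_gain / auc["Logit"], 2)

print(gini)      # {'Logit': 0.5, 'RF': 0.66, 'GB': 0.68}
print(abs_gain)  # 0.09
print(rel_gain)  # 0.12
```

Under that convention the absolute Gini gain is twice the AUC gain, which is consistent with the excerpt's remark that the Gini score improves in parallel.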

However, these variability concerns were potentially obscured because of an interesting feature of those benchmarking datasets extracted from the UCI machine learning repository for testing: all these datasets are hard to overfit using tree-structured methods. This raises issues about the scope of the repository. With this as motivation, and coupled with experience from boosting methods, we

Machine learning
• Learning/training: build a classification or regression rule from a set of samples
• Prediction: assign a class or value to new samples
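The two phases listed above map onto the `fit` and `predict` methods in scikit-learn. The sketch below assumes scikit-learn is installed and uses a tiny made-up dataset; it shows one classification rule and one regression rule.

```python
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor

# Tiny made-up training set: two binary features per sample.
X_train = [[0, 0], [0, 1], [1, 0], [1, 1], [0, 0], [1, 1]]
y_class = [0, 0, 1, 1, 0, 1]              # class labels
y_value = [0.0, 0.1, 1.0, 1.1, 0.0, 1.1]  # numeric targets

# Learning/training: build the rule from the samples.
clf = RandomForestClassifier(n_estimators=20, random_state=0).fit(X_train, y_class)
reg = RandomForestRegressor(n_estimators=20, random_state=0).fit(X_train, y_value)

# Prediction: assign a class or a value to a new sample.
new_sample = [[1, 0]]
predicted_class = clf.predict(new_sample)[0]
predicted_value = reg.predict(new_sample)[0]
print(predicted_class, predicted_value)
```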

Machine learning is the process of developing, testing, and applying predictive algorithms to achieve this goal. Make sure to familiarize yourself with course 3 of this specialization before diving into these machine learning concepts. Building on Course 3, which introduces students to integral supervised machine learning concepts, this course will provide an overview of many additional

Building a Random Forest with SAS coursera.org

H2O includes many common machine learning algorithms, such as generalized linear modeling (linear regression, logistic regression, etc.), Naïve Bayes, principal components analysis, k-means clustering, and …

An Introduction to Ensemble Learning in Credit Risk Modelling. October 15, 2014. Han Sheng Sun, BMO; Zi Jin, Wells Fargo. Disclaimer: “The opinions expressed in this presentation and on the following slides are solely those of the presenters, and are not necessarily those of the employers of the presenters (BMO or Wells Fargo). The methods presented are not necessarily in use at BMO or …

General features of a random forest: if the original feature vector $x \in \mathbb{R}^d$ has features $x_1, \ldots, x_d$, each tree uses a random selection of $m \approx \sqrt{d}$ features.

ECE591Q Machine Learning Journal Paper. Fall 2005. Implementation of Breiman’s Random Forest Machine Learning Algorithm. Frederick Livingston

Random Forests, the number of decision trees was set to 500 to make sure that every input observation was predicted enough times by multiple trees, which helps to prevent overfitting. Additive nonparametric regression was implemented using the package mgcv in R, which has
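One way to see why a few hundred trees give every observation "enough" predictions is to count the trees for which an observation is out-of-bag, that is, left out of the tree's bootstrap sample. The sketch below assumes the excerpt refers to out-of-bag prediction and uses a hypothetical training set size of 1000; only the figure of 500 trees comes from the text.

```python
import math


def oob_probability(n_samples):
    """Probability that a given observation is absent from one bootstrap sample."""
    return (1 - 1 / n_samples) ** n_samples


n_samples = 1000  # hypothetical training set size
n_trees = 500     # number of trees quoted in the excerpt

p_oob = oob_probability(n_samples)
expected_oob_trees = n_trees * p_oob

print(f"P(out-of-bag for one tree) = {p_oob:.3f}")        # close to exp(-1)
print(f"Expected out-of-bag trees  = {expected_oob_trees:.0f}")
```

For large training sets the probability approaches 1/e, so each observation is out-of-bag for roughly 37% of the trees.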

Seeing the forest for the trees. Nadele Flynn, Presentation to RenR690, April 4, 2018. RandomForest: Machine Learning using Decision Trees. Machine learning… for pick

Such applications have traditionally been addressed by different, supervised or unsupervised machine learning techniques. However, in this book, diverse learning tasks including regression, classification and semi-supervised learning are all seen as instances of the same general decision forest model.

Implementation of Breiman’s Random Forest Machine Learning Algorithm. Frederick Livingston. Abstract: This research provides tools for exploring Breiman’s Random Forest algorithm. This paper will focus on the development, the verification, and the significance of variable importance. Introduction: A classical machine learner is developed by collecting samples of data to represent the entire
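Variable importance is often estimated by permutation: shuffle one feature's values and measure how much accuracy drops. The standard-library sketch below illustrates that idea only; the threshold `model` is a hypothetical stand-in for a trained forest, the data are random, and this is not the paper's implementation.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows for which the model's output matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, feature, rng):
    """Drop in accuracy after shuffling the values of one feature column."""
    baseline = accuracy(model, X, y)
    column = [row[feature] for row in X]
    rng.shuffle(column)
    X_perm = [row[:feature] + [v] + row[feature + 1:] for row, v in zip(X, column)]
    return baseline - accuracy(model, X_perm, y)


def model(row):
    """Stand-in for a trained forest: uses feature 0 only, ignores feature 1."""
    return int(row[0] > 0.5)


rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(200)]
y = [model(row) for row in X]

importance_0 = permutation_importance(model, X, y, 0, rng)
importance_1 = permutation_importance(model, X, y, 1, rng)
print(importance_0, importance_1)
```

Shuffling the feature the model relies on lowers accuracy sharply, while shuffling the ignored feature leaves it unchanged, which is the signal a permutation-based importance score reports.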

class algorithms such as Random Forest, Gradient Boosting, and Deep Learning at scale. Customers can build thousands of models and compare them to get the best prediction results.

Machine Learning for lithology classification Random Forests partial dependency plots for QHV •Indicates the relative influence of a single variable on class

Market Making with Machine Learning Methods. Kapil Kanagal, Yu Wu, Kevin Chen. {kkanagal,wuyu8,kchen42}@stanford.edu. June 10, 2017.

Automated Bitcoin Trading via Machine Learning Algorithms Isaac Madan Department of Computer Science Stanford University Stanford, CA 94305 imadan@stanford.edu Shaurya Saluja Department of Computer Science Stanford University Stanford, CA 94305 shaurya@stanford.edu Aojia Zhao Department of Computer Science Stanford University Stanford, CA 94305 aojia93@stanford.edu …

DEGREE PROJECT IN TECHNOLOGY, FIRST CYCLE, 15 CREDITS. STOCKHOLM, SWEDEN 2016. Technical Analysis inspired Machine Learning for Stock Market Data. GUSTAV KIHLSTRÖM

One possible way is by making use of the machine learning method Random Forest. Namely, a forest is just a collection of trees…

Python Code R Code Types Machine Learning Algorithms ( Python and R Codes) Supervised Learning Unsupervised Learning Reinforcement Learning Decision Tree Random Forest

Implementation of Breiman’s Random Forest Machine Learning

The course will also introduce a range of model based and algorithmic machine learning methods including regression, classification trees, Naive Bayes, and random forests. The course will cover the complete process of building prediction functions including data …

Decision Forests Microsoft Research

Machine Learning Algorithms Analytics Discussions

Image Classification using Random Forests and Ferns

Random Forest in Machine Learning Tutorialkart.com

Kaggle R Tutorial on Machine Learning (practice) DataCamp

Random forests classification description

https://gl.wikipedia.org/wiki/Random_Forest

R – Package randomForest

Random forest Wikipedia

machine learning Balanced Random Forest in R – Stack

Claim Risk Scoring Using Survival Analysis Framework and

Random Forest University of California Berkeley

Trees and Random Forests Utah State University

Using Random Forest to Learn Imbalanced Data

Random Forest Machine Learning in R Python and SQL Part 1

Table of Contents H2O

Hemant Ishwaran CCS

random forest machine-learning methods

Introduction To Random Forest Simplified Business Case

Machine Learning Benchmarks and Random Forest Regression

An introduction to random forests univ-toulouse.fr

Machine learning for lithology classification data fusion

Extreme Machine Learning with GPUs John Canny Computer Science Division University of California, Berkeley GTC, March, 2014

Introduction to Random forest – Simplified Tavish Srivastava , June 10, 2014 With increase in computational power, we can now choose algorithms which perform very intensive calculations.

Random forest (Breiman, 2001) is an ensemble of unpruned classification or regression trees, induced from bootstrap samples of the training data, using random feature selection in …

Regression and two state-of-the-art Machine Learning algorithms: Random Forest (RF) and Gradient Boosting (GB). The ROC-AUC score (presented in the following section) equals 0.75 for the Logit model, reaches 0.83 for RF and goes up to 0.84 for GB. The gain in prediction quality is obvious, with a gain of up to 9% in ROC-AUC score. In parallel, the same happens with the Gini score with a gain
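To make the size of that gain concrete, the short sketch below recomputes it from the three ROC-AUC values quoted above. It assumes the Gini score mentioned in the snippet is the usual Gini coefficient, Gini = 2 × AUC − 1; the snippet does not say so, and the variable and function names are illustrative only.

```python
# ROC-AUC values quoted in the text above.
auc = {"Logit": 0.75, "RF": 0.83, "GB": 0.84}


def gini_from_auc(a):
    """Gini coefficient under the common convention Gini = 2 * AUC - 1 (assumed here)."""
    return 2 * a - 1


baseline = auc["Logit"]
for name, value in auc.items():
    abs_gain = value - baseline        # gain in AUC points over the Logit model
    rel_gain = abs_gain / baseline     # the same gain relative to the Logit model
    print(f"{name}: AUC={value:.2f}  abs gain={abs_gain:+.2f}  "
          f"rel gain={rel_gain:+.1%}  Gini={gini_from_auc(value):.2f}")
```

Read this way, the quoted "9%" matches the absolute difference of 0.09 AUC points between the Logit model and GB, which is a relative improvement of 12%.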

Implementation and Evaluation of a Random Forest Machine Learning Algorithm Viachaslau Sazonau University of Manchester, Oxford Road, Manchester, M13 9PL,UK

Random forest Wikipedia

Ensemble learning Wikipedia

memory-based learning, random forests, decision trees, bagged trees, boosted trees, and boosted stumps. We also examine the effect that calibrating the models via Platt Scaling and Isotonic Regression has on their performance. An important aspect of our study is the use of a variety of performance criteria to evaluate the learning methods. 1. Introduction There are few comprehensive empirical
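As a rough illustration of one of the two calibration methods named above, the sketch below fits an isotonic (monotone non-decreasing) map from raw classifier scores to probabilities with the pool-adjacent-violators algorithm. It is a minimal stand-alone version, not the procedure used in the quoted study; the function name and the toy scores are made up for the example.

```python
def isotonic_calibrate(scores, labels):
    """Return one calibrated probability per input, via pool-adjacent-violators."""
    # Process the examples in order of increasing raw score.
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    blocks = []  # each block is [sum_of_labels, count]
    for i in order:
        blocks.append([labels[i], 1])
        # Merge backwards while the previous block's mean exceeds the new block's mean.
        while len(blocks) > 1 and (
            blocks[-2][0] * blocks[-1][1] > blocks[-1][0] * blocks[-2][1]
        ):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    # Every example in a block receives the block mean.
    fitted = []
    for s, c in blocks:
        fitted.extend([s / c] * c)
    # Put the fitted values back into the original input order.
    calibrated = [0.0] * len(scores)
    for rank, i in enumerate(order):
        calibrated[i] = fitted[rank]
    return calibrated


raw_scores = [0.1, 0.4, 0.35, 0.8, 0.9]   # toy classifier outputs
true_labels = [0, 0, 1, 1, 1]             # toy 0/1 outcomes
print(isotonic_calibrate(raw_scores, true_labels))
```

In practice the map would be fitted on held-out data and then applied to new scores; that step is omitted here.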

1. Random Forests 1.1 Introduction Significant improvements in classification accuracy have resulted from growing an ensemble of trees and letting them vote for the most popular class.

Such applications have traditionally been addressed by different, supervised or unsupervised machine learning techniques. However, in this book, diverse learning tasks including regression, classification and semi-supervised learning are all seen as instances of the same general decision forest model.

However, these variability concerns were potentially obscured because of an interesting feature of those benchmarking datasets extracted from the UCI machine learning repository for testing: all these datasets are hard to overfit using tree-structured methods. This raises issues about the scope of the repository. With this as motivation, and coupled with experience from boosting methods, we

Enhancement of Web Proxy Caching Using Random Forest Machine Learning Technique. Julian Benadit.P1, Sagayaraj Francis.F2, Nadhiya.M3 1 Research scholar,

2 Method Random forests is a popular ensemble method invented by Breiman and Cutler. It was chosen for this contest because of the many advantages it offers.

Random Forest is one of the most popular and most powerful machine learning algorithms. It is a type of ensemble machine learning algorithm called Bootstrap Aggregation or bagging. In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling
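The bootstrap step behind bagging can be sketched in a few lines: each tree is trained on a sample of the rows drawn with replacement, so some rows repeat and others are left out. The function name and the fixed seed below are illustrative choices, not part of any particular library.

```python
import random


def bootstrap_sample(n_rows, rng):
    """Draw n_rows row indices with replacement from range(n_rows)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


rng = random.Random(0)        # fixed seed, only so the run is repeatable
n = 1000
sample = bootstrap_sample(n, rng)
unique_fraction = len(set(sample)) / n
print(f"{unique_fraction:.3f} of the rows appear at least once in this sample")
```

On average about 63.2% (1 − 1/e) of the rows end up in a given bootstrap sample; the rest are "out of bag" for that tree.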

A random forests quantile classifier for class imbalanced data. Ishwaran H. and Lu M. (2017). Standard errors and confidence intervals for variable importance in random forest …

Random Forests, and Neural Networks which can be used for market prediction. Examples include Kim (2003), Cao and Tay (2003), Huang, Nakamori and …

class algorithms such as Random Forest, Gradient Boosting, and Deep Learning at scale. Customers can build thousands of models and compare them to get the best prediction results.

Ensemble Machine Learning in Python Random Forest

Deep Neural Decision Forests microsoft.com

1 Paper 2521-2018 Claim Risk Scoring using Survival Analysis Framework and Machine Learning with Random Forest Yuriy Chechulin, Jina Qu, Terrance D’souza

Machine Learning for lithology classification Random Forests partial dependency plots for QHV •Indicates the relative influence of a single variable on class
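A partial dependence curve of the kind mentioned above can be computed by forcing one feature to each value on a grid and averaging the model's predictions over the data. The sketch below is a minimal version under that definition; `toy_model` is a hypothetical stand-in for a fitted forest, and the rows are invented.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction when `feature` is forced to each grid value in turn."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature] = value   # overwrite one feature, keep the others
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def toy_model(row):
    """Hypothetical stand-in for a forest's class probability; it only uses feature 0."""
    return 0.8 if row[0] > 0.5 else 0.2


rows = [[0.1, 3.0], [0.4, 1.0], [0.7, 2.0], [0.9, 5.0]]
print(partial_dependence(toy_model, rows, feature=0, grid=[0.0, 0.5, 1.0]))
print(partial_dependence(toy_model, rows, feature=1, grid=[0.0, 2.5, 5.0]))
```

A curve that changes along the grid (feature 0 here) points to an influential variable; a flat curve (feature 1 here) points to one the model ignores.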

Random Forest in Machine Learning Random forest handles non-linearity by exploiting correlation between the features of a data point or experiment. When the training data has correlations between its features, the random forest method is a better choice for classification or regression.

Generation of Random Forest in Machine Learning What is the training data for a Random Forest in Machine Learning? Training data is an array of vectors in N-dimensional space.

Package ‘randomForest’ March 25, 2018 Title Breiman and Cutler’s Random Forests for Classification and Regression Version 4.6-14 Date 2018-03-22

Visualization from scikit-learn.org illustrating decision boundaries and modeling capacity of a single decision tree, a random forest and some other techniques.

Using Random Forest to Learn Imbalanced Data

Table of Contents H2O

An Introduction to Ensemble Learning in Credit Risk Modelling October 15, 2014 Han Sheng Sun, BMO Zi Jin, Wells Fargo . 2 Disclaimer “The opinions expressed in this presentation and on the following slides are solely those of the presenters, and are not necessarily those of the employers of the presenters (BMO or Wells Fargo). The methods presented are not necessarily in use at BMO or …

Automated Bitcoin Trading via Machine Learning Algorithms Isaac Madan Department of Computer Science Stanford University Stanford, CA 94305 imadan@stanford.edu Shaurya Saluja Department of Computer Science Stanford University Stanford, CA 94305 shaurya@stanford.edu Aojia Zhao Department of Computer Science Stanford University Stanford, CA 94305 aojia93@stanford.edu …

Random Forest for Bioinformatics Carnegie Mellon School

Market Making with Machine Learning Methods Kapil Kanagal Yu Wu Kevin Chen {kkanagal,wuyu8,kchen42}@stanford.edu June 10, 2017 Contents 1 Introduction 2

Machine Learning Mastery is a perfect blend of math, statistics, and computer science contexts packaged in a practical approach to learning the key points of Machine Learning. This is a great book for more than curious Engineers and Manager types who want a clear, rich, and fact-filled summary of the field of Machine Learning.

Random Forests, the number of decision trees was set to 500 to make sure that every input observation was predicted enough times by multiple trees, which helps to prevent overfitting. Additive nonparametric regression was implemented using the package mgcv in R, which has
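One way to read "predicted enough times by multiple trees" is in terms of out-of-bag prediction, where a row is only scored by the trees whose bootstrap sample did not contain it. That reading is an assumption, as the snippet does not spell it out. Under it, the sketch below counts how many of 500 trees leave each row out of bag; the row count and seed are arbitrary.

```python
import random


def oob_counts(n_rows, n_trees, rng):
    """For each row, count the trees whose bootstrap sample did not contain it."""
    counts = [0] * n_rows
    for _ in range(n_trees):
        # Indices drawn with replacement for this tree's training sample.
        in_bag = {rng.randrange(n_rows) for _ in range(n_rows)}
        for i in range(n_rows):
            if i not in in_bag:
                counts[i] += 1
    return counts


counts = oob_counts(n_rows=50, n_trees=500, rng=random.Random(0))
print("fewest out-of-bag trees for any row:", min(counts))
print("most out-of-bag trees for any row:", max(counts))
```

Each row is out of bag for roughly 36% to 37% of the trees, so with 500 trees every row is scored by well over a hundred of them.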

General features of a random forest: if the original feature vector $x \in \mathbb{R}^d$ has $d$ features, each tree uses a random selection of $m \approx \sqrt{d}$ features.
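Assuming the selection size meant above is the common default of about √d of the d available features, the random feature choice can be sketched as follows. The function name is hypothetical, and many implementations draw a fresh subset at every split rather than once per tree.

```python
import math
import random


def random_feature_subset(d, rng):
    """Pick m = round(sqrt(d)) distinct feature indices out of d (at least one)."""
    m = max(1, round(math.sqrt(d)))
    return sorted(rng.sample(range(d), m))


rng = random.Random(0)   # fixed seed, only so the run is repeatable
for tree in range(3):
    print("tree", tree, "uses features", random_feature_subset(16, rng))
```

With d = 16 features each draw contains 4 indices, and different trees generally see different subsets, which is what decorrelates them.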

ABSTRACT Data analysis and machine learning have become an integrative part of the modern scientific methodology, offering automated procedures for the prediction of a phenomenon based on past observations, un-

Implementation of Breiman’s Random Forest Machine Learning Algorithm Frederick Livingston Abstract This research provides tools for exploring Breiman’s Random Forest algorithm. This paper will focus on the development, the verification, and the significance of variable importance. Introduction A classical machine learner is developed by collecting samples of data to represent the entire
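Variable importance is often estimated by permutation: shuffle one feature column and measure how much accuracy drops. The sketch below shows that idea on a whole dataset; Breiman's version evaluates each tree on its out-of-bag rows, which is not reproduced here. `toy_model` is a hypothetical stand-in for a fitted forest and the data are random.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows whose predicted label equals the true label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(predict, rows, labels, feature, rng):
    """Drop in accuracy after shuffling the values of one feature column."""
    baseline = accuracy(predict, rows, labels)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return baseline - accuracy(predict, shuffled, labels)


def toy_model(row):
    """Hypothetical stand-in for a fitted forest; it only looks at feature 0."""
    return int(row[0] > 0.5)


rng = random.Random(0)
rows = [[rng.random(), rng.random()] for _ in range(200)]
labels = [toy_model(r) for r in rows]
importances = [permutation_importance(toy_model, rows, labels, j, rng) for j in range(2)]
print(importances)
```

Shuffling the feature the model relies on costs a large share of the accuracy, while shuffling the unused feature costs nothing.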

Random Forest in Machine Learning tutorialkart.com

Machine Learning Algorithms Analytics Discussions

Python Code R Code Types Machine Learning Algorithms ( Python and R Codes) Supervised Learning Unsupervised Learning Reinforcement Learning Decision Tree Random Forest

Building a Random Forest with SAS coursera.org

Introduction to decision trees and random forests Ned Horning American Museum of Natural History’s Center for Biodiversity and Conservation horning@amnh.org

Random Forest is a powerful machine learning algorithm that allows you to create models that can give good overall accuracy when making new predictions. This is achieved because Random Forest is an ensemble machine learning technique that builds and uses many tens or hundreds of decision trees, all created in a slightly different way. Each of these decision trees is used to make a prediction

R – Package randomForest

Machine Learning Benchmarks and Random Forest Regression

Machine learning • Learning/training: build a classification or regression rule from a set of samples • Prediction: assign a class or value to new samples

Implementation and Evaluation of a Random Forest Machine

I think this is a great question, but asking in the wrong place. It is really a methods question and/or a request for a package recommendation.

Random forests classification description

21/02/2013 · Random forests, aka decision forests, and ensemble methods. Slides available at: http://www.cs.ubc.ca/~nando/540-2013/… Course taught in 2013 at UBC by Nando de

Enhancement of Web Proxy Caching Using Random Forest

Kaggle R Tutorial on Machine Learning (practice) DataCamp

[PDF] Implementation of Breiman s Random Forest Machine

The course will also introduce a range of model based and algorithmic machine learning methods including regression, classification trees, Naive Bayes, and random forests. The course will cover the complete process of building prediction functions including data …

Implementation of Breiman’s Random Forest Machine Learning

Machine Learning in Credit Risk Modeling james.finance

Hemant Ishwaran CCS

machine learning Balanced Random Forest in R – Stack

Claim Risk Scoring Using Survival Analysis Framework and

Decision Forests Microsoft Research

RandomForest Machine Learning using Decisions Trees

Extreme Machine Learning with GPUs GTC On-Demand

Introduction Construction R functions Variable importance Tests for variable importance Conditional importance Summary References Why and how to use random forest

Why and how to use random forest TU Dortmund

An Empirical Comparison of Supervised Learning Algorithms

The Unreasonable Effectiveness of Random Forests – Rants

Ned Horning American Museum of Natural History’s Center

Random Forest University of California Berkeley

An Introduction to Ensemble Learning in Credit Risk Modelling

Image Classification using Random Forests and Ferns

• classification: SVM, nearest neighbors, random forest, • MLlib is a standard component of Spark providing machine learning primitives on top of Spark. • MLlib is also comparable to or even better than other libraries specialized in large-scale machine learning. 24. Why MLlib? • Scalability • Performance • User-friendly APIs • Integration with Spark and its other components

The Random Forest Based Detection of Shadowsock’s Traffic

Introduction To Random Forest Simplified Business Case

Machine learning is that domain of computational intelligence which is concerned with the question of how to construct computer programs that automatically improve with experience. [16] Reductionist attitude: ML is just a buzzword which equates to statistics plus marketing Positive attitude: ML paved the way to the treatment of real problems related to data analysis, sometimes overlooked by

Random Forest Machine Learning in R Python and SQL Part 1

Predicting borrowers’ chance of defaulting on credit loans

Trees and Random Forests . Adele Cutler . Professor, Mathematics and Statistics . Utah State University . This research is partially supported by NIH 1R15AG037392-01

Random forests or random decision forests are an ensemble learning method for classification, regression and other tasks that operates by constructing a multitude of decision trees at training time and outputting the class that is the mode of the classes (classification) or mean prediction (regression) of the individual trees.
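The aggregation step described above can be sketched directly: the forest returns the mode of the trees' class votes for classification and the mean of their outputs for regression. The function names and the toy votes are illustrative only.

```python
from collections import Counter
from statistics import mean


def aggregate_classification(tree_votes):
    """Return the most common class label among the individual trees' votes."""
    # On a tie, Counter keeps the label that was seen first.
    return Counter(tree_votes).most_common(1)[0][0]


def aggregate_regression(tree_outputs):
    """Return the mean of the individual trees' numeric predictions."""
    return mean(tree_outputs)


print(aggregate_classification(["spam", "ham", "spam", "spam", "ham"]))
print(aggregate_regression([2.0, 3.0, 4.0]))
```

Some implementations average the trees' class probabilities instead of counting hard votes; the sketch follows the mode-based description in the text.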

Machine learning is the process of developing, testing, and applying predictive algorithms to achieve this goal. Make sure to familiarize yourself with course 3 of this specialization before diving into these machine learning concepts. Building on Course 3, which introduces students to integral supervised machine learning concepts, this course will provide an overview of many additional

Machine learning Random forests – YouTube

Technical Analysis inspired Machine Learning for Stock

random forest machine-learning methods
